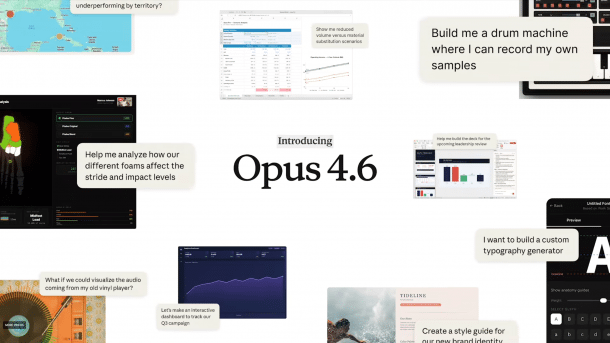

Wedoany.com reported on Feb 6th that AI company Anthropic has launched Claude Opus 4.6, the latest model in its Opus series. The model shows significant improvements on programming tasks and, for the first time in the Opus line, offers a one-million-token context window, which is currently in a testing phase. Opus 4.6 also introduces agent teams, a collaboration mechanism that lets multiple Claude Code instances work in parallel on complex problems.

Agent teams are a headline addition to Claude Code and are currently offered as a research preview. Multiple independent Claude Code instances run simultaneously and coordinate with each other, an approach similar to the Codex application OpenAI recently released. A lead session handles overall coordination, task assignment, and result aggregation. Each teammate has its own context window, can communicate directly with other teammates, and works from a shared task list, so different problems can be processed in parallel.

The feature is enabled by setting the environment variable `CLAUDE_CODE_EXPERIMENTAL_AGENT_TEAMS=1`. Because each instance is billed separately, agent teams consume more tokens, which makes them best suited to complex collaborative work that benefits from multi-angle analysis or parallel solutions. Sub-agents, by contrast, operate within a single session and only return results to the agent that delegated to them, making them better suited to focused, single tasks.

Opus 4.6 also comes with several other new features: context compaction, which summarizes older information to free up space in the context window; adaptive thinking, which lets the model automatically spend longer reasoning on complex tasks; four effort levels that developers can choose from to control computational cost; and a maximum output length raised to 128,000 tokens.
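The idea behind context compaction can be illustrated with a toy function: once a conversation exceeds a token budget, older messages are replaced by a single summary while the most recent ones are kept verbatim. This is a conceptual sketch under stated assumptions, not Anthropic's implementation: tokens are approximated by whitespace-separated words, and `summarize` is a hypothetical placeholder where a real system would ask the model to write the summary.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def summarize(messages: list[str], max_words: int = 8) -> str:
    # Hypothetical placeholder summarizer that keeps only the first few words.
    words = " ".join(messages).split()
    return "[summary] " + " ".join(words[:max_words])


def compact(history: list[str], budget: int, keep_recent: int = 2) -> list[str]:
    """Replace older messages with one summary once the history exceeds budget."""
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # Under budget (or nothing old to compact): leave as-is.
    old, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(old)] + recent
```

The design choice worth noting is that recent turns survive untouched, since they are usually the most relevant to the next step, while older turns are traded for a shorter summary.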

According to benchmark results published by Anthropic, Opus 4.6 performed strongly across several evaluations: it achieved the highest score on the agentic coding benchmark Terminal-Bench 2.0; it ranked among the top models on the reasoning benchmark Humanity's Last Exam; and on GDPval-AA, an evaluation of economically valuable tasks, it scored 144 Elo points higher than OpenAI's GPT-5.2 and 190 Elo points higher than its predecessor, Opus 4.5.
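Elo gaps are easier to interpret as expected head-to-head win rates. Assuming GDPval-AA uses the standard logistic Elo model with a 400-point scale (the article does not describe its exact methodology), the expected score of the higher-rated model is 1 / (1 + 10^(-d/400)) for a rating gap d. The sketch below applies that formula to the two reported gaps; `elo_expected_score` is a hypothetical helper name.

```python
def elo_expected_score(rating_diff: float) -> float:
    """Expected score of the higher-rated side under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** (-rating_diff / 400))


for label, diff in [("vs GPT-5.2", 144), ("vs Opus 4.5", 190)]:
    print(f"{label}: +{diff} Elo -> expected score {elo_expected_score(diff):.3f}")
# vs GPT-5.2: +144 Elo -> expected score 0.696
# vs Opus 4.5: +190 Elo -> expected score 0.749
```

Under that assumption, a 144-point lead corresponds to winning roughly 70% of pairwise comparisons, and a 190-point lead to roughly 75%.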

On long contexts, Opus 4.6 achieved a 76% success rate on the 8-needle, 1M-token variant of MRCR v2, compared with only 18.5% for Sonnet 4.5. On the BigLaw benchmark, the model scored 90.2%, the highest result achieved by any Claude model to date; 40% of its answers were perfect, and 84% scored 0.8 or higher.

In terms of safety, Opus 4.6 is on par with other frontier models and shows a low rate of misaligned behavior: its alignment matches that of Opus 4.5, but it over-refuses less often. For cybersecurity, Anthropic developed six new test scenarios, and the model meets the ASL-3 standard.

Pricing is set at $5 per million input tokens and $25 per million output tokens. For requests exceeding 200,000 tokens, premium rates of $10 and $37.50 apply, respectively. Going forward, customers who choose to run inference entirely in the US will pay a 10% surcharge.

Updated on February 5, 2026: Added relevant information about the release of OpenAI GPT-5.3 Codex.
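The Opus 4.6 pricing rules can be turned into a quick per-request cost estimate. This is a sketch under two assumptions that the article does not spell out: the 200,000-token threshold is measured on input tokens and, once crossed, the premium rates apply to the whole request; and the 10% US-only surcharge multiplies the total. `estimate_cost_usd` is a hypothetical helper name.

```python
# Opus 4.6 rates in USD per million tokens, as reported in the article.
STANDARD_RATES = (5.00, 25.00)       # (input, output) up to 200K input tokens
LONG_CONTEXT_RATES = (10.00, 37.50)  # (input, output) above 200K input tokens
LONG_CONTEXT_THRESHOLD = 200_000
US_ONLY_SURCHARGE = 0.10


def estimate_cost_usd(input_tokens: int, output_tokens: int, us_only: bool = False) -> float:
    """Estimate the cost of one request.

    Assumption: premium rates apply to the whole request once input exceeds
    200K tokens, and the US-only surcharge multiplies the total.
    """
    in_rate, out_rate = (
        LONG_CONTEXT_RATES if input_tokens > LONG_CONTEXT_THRESHOLD else STANDARD_RATES
    )
    cost = (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
    return cost * (1 + US_ONLY_SURCHARGE) if us_only else cost


print(f"{estimate_cost_usd(100_000, 10_000):.2f}")                # 0.75
print(f"{estimate_cost_usd(300_000, 20_000):.2f}")                # 3.75
print(f"{estimate_cost_usd(300_000, 20_000, us_only=True):.4f}")  # 4.1250
```

The second example shows why the threshold matters: tripling the input from 100K to 300K tokens raises the estimate fivefold, because the premium rates kick in.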