Wedoany.com Report on Feb 12th: As artificial intelligence moves rapidly from the laboratory into the real economy, the industry's focus is shifting from model scale to response speed. Inference latency has become a key bottleneck for deploying AI at scale. This is especially true in scenarios that require real-time interaction and decision-making, such as software development, enterprise operations, and industrial control. In these settings, latency differences of a few milliseconds can determine whether the technology can be put into practical use at all.

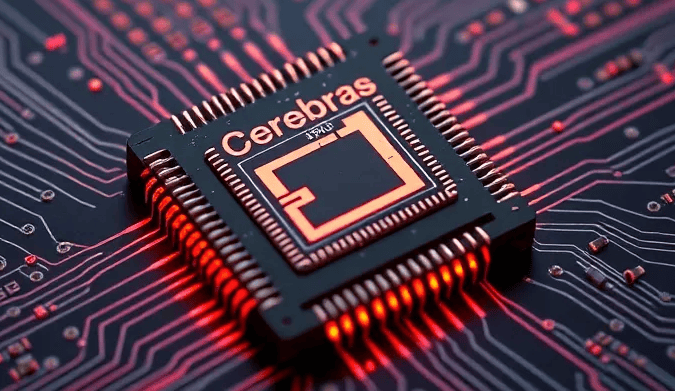

As an innovative company focused on AI computing architecture, Cerebras is gaining an early lead in this "speed race" with its distinctive wafer-scale chip design. In early 2026, the company closed a $1 billion Series H financing round. The round brought its total funding to nearly $3 billion and lifted its valuation to approximately $23 billion, a sign of the capital market's growing confidence in its technical approach.

In a recent public interview, Cerebras CEO Andrew Feldman said: "AI has gone from being something 'you try once and think it's cool' to a foundational tool 'you use 25 times a day'. When that transition happens, a 30-second response wait is unacceptable." He emphasized that inference speed is becoming a key factor in the economic viability and operational safety of agentic AI, real-time decision systems, and even industrial automation.

Cerebras's technical approach differs fundamentally from traditional GPU clusters. The company places a data-center-scale computing architecture on a single wafer-scale chip about the size of a dinner plate. This eliminates the switches and cables that connect multiple chips in conventional systems. Feldman explained: "We built the largest chip in history. By eliminating the redundant latency caused by inter-chip communication, we have achieved orders of magnitude speed improvements in both training and inference." This design philosophy dates back to a strategic judgment the company made in 2016: that the future of AI computing depends not only on the scale of computing power but also on how efficiently data moves on and off the chip.

Inference is the stage that determines how usable AI is in practice, and here Cerebras has established a clear competitive advantage. Feldman said candidly: "Right now, there's no other benchmark we need to prove anything other than 'we're the fastest at inference'. It's a very nice place to be." As AI systems become embedded in latency-sensitive settings such as industrial production, code writing, and automated decision-making, inference speed is evolving from a technical metric into a commercial moat.

Looking ahead, Feldman believes AI is making the transition from an "innovative tool" to "infrastructure." "Fast AI will change everything," he said. "Just as electricity went from a luxury to a necessity, the speed of AI will reshape the underlying logic of global economic operations." His view suggests that the next phase of competition in the AI industry will turn less on "who can build bigger models" and more on "who can run intelligent services with lower latency and higher efficiency."