Researchers from the Italian Institute of Technology (IIT) and the University of Aberdeen have proposed a new conceptual framework, together with a dataset of computationally generated data, for training vision-language models (VLMs) to perform spatial reasoning tasks, with the aim of improving robots' spatial reasoning abilities. The work is published as a preprint on the arXiv server and could help embodied artificial intelligence (AI) systems navigate real-world environments and communicate with humans.

This research is an outcome of the FAIR* project and stems from a collaboration between IIT's Social Cognition in Human-Robot Interaction (S4HRI) research line, supervised by Professor Agnieszka Wykowska, and the University of Aberdeen's Action Prediction Lab, led by Professor Patric Bach.

IIT technical expert and co-senior author Davide De Tommaso stated that the research group focuses on applying social cognition mechanisms in human-AI interaction. Previous studies have shown that under certain conditions, people attribute intentionality to robots and interact with them as social partners. Therefore, understanding the role of non-verbal cues such as gaze, gestures, and spatial behavior is crucial for developing computational models of robot social cognition.

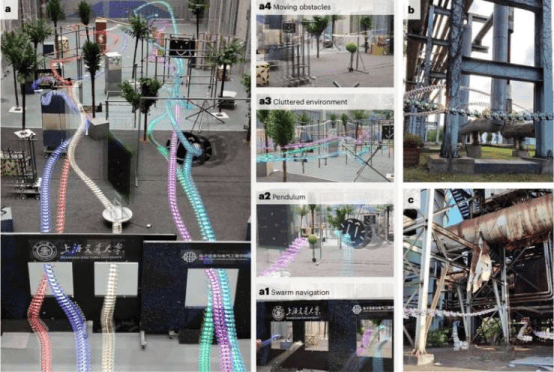

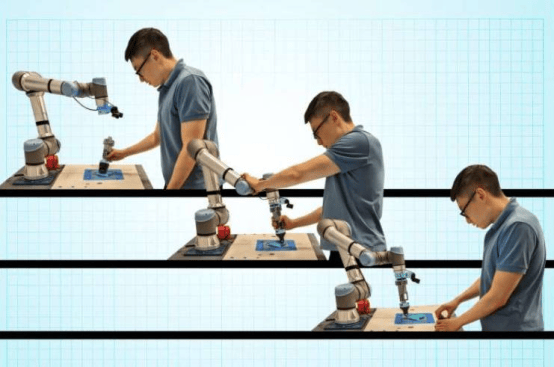

Visual Perspective Taking (VPT), the ability to understand a visual scene from another's viewpoint, matters for robotic systems because it helps them comprehend instructions and collaborate with other agents on tasks. De Tommaso said the primary goal is to enable robots to reason effectively about what other agents perceive in a shared environment, for example whether a piece of text is readable, whether an object is occluded, or whether an object's orientation is suitable for a human to grasp. Although current foundation models lack sufficient spatial reasoning capabilities, he said that combining large language models for scene understanding with synthetic scene representations is a promising route to modeling human-like VPT abilities in embodied AI agents.

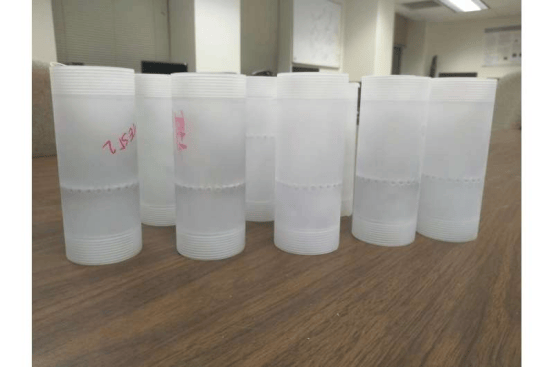

To improve VLMs' VPT capabilities, the researchers compiled a dataset to support training. They used NVIDIA's Omniverse Replicator platform to create "artificial worlds": simple scenes containing cubes that can be observed from multiple angles and distances. Within these simulated worlds they captured 3D images of the cubes, each accompanied by a natural language description and a 4×4 transformation matrix (a mathematical structure representing the cube's position and orientation). The dataset has been released online so that other teams can use it to train VLMs.
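To make the idea of a 4×4 transformation matrix concrete, the following is a minimal sketch in plain Python, not the researchers' code. It assumes a simplified pose (rotation about the vertical axis only, plus a translation), and the function names and the example pose values are hypothetical, chosen purely for illustration. It shows how such a matrix maps a point on a cube into the camera's frame, and how inverting the matrix maps it back.

```python
import math

def make_transform(yaw_deg, translation):
    """Build a 4x4 homogeneous transform: rotation about the z-axis plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def apply_transform(matrix, point):
    """Map a 3D point through the transform using homogeneous coordinates."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    result = [sum(row[i] * homogeneous[i] for i in range(4)) for row in matrix]
    return tuple(result[:3])

def invert_rigid(matrix):
    """Invert a rigid transform: transpose the rotation, then rotate the negated translation."""
    rot_t = [[matrix[j][i] for j in range(3)] for i in range(3)]
    t = [matrix[i][3] for i in range(3)]
    new_t = [-sum(rot_t[i][j] * t[j] for j in range(3)) for i in range(3)]
    return [rot_t[i] + [new_t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

# Hypothetical pose (illustrative values only): the cube is rotated 90 degrees
# about z and sits 2 units along x and 0.5 units along z from the camera.
cube_in_camera = make_transform(90.0, (2.0, 0.0, 0.5))

# A point expressed in the cube's own frame, then mapped into the camera frame.
corner_in_cube = (0.1, 0.0, 0.0)
corner_in_camera = apply_transform(cube_in_camera, corner_in_cube)

# The inverse transform answers the opposite question: where the same point
# lies when expressed relative to the cube.
camera_to_cube = invert_rigid(cube_in_camera)
round_trip = apply_transform(camera_to_cube, corner_in_camera)
```

Because a single matrix carries both rotation and translation, changing viewpoint reduces to a matrix product or an inverse, which is one reason this representation is common in robotics and graphics.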

First author Joel Currie, a PhD student at the University of Aberdeen and researcher at IIT, explained that each image captured by the virtual camera is paired with a text prompt describing the cube's size and a precise transformation matrix, the kind of data robots use to plan movements and interact with the world. Because the synthetic environment is fully controllable, it can rapidly generate large numbers of image-matrix pairs, something that is difficult to achieve in real-world settings. The aim, he said, is for robots not only to "see" but also to understand space.

For now the framework remains theoretical, but it opens new possibilities for training real VLMs. Researchers can use the compiled dataset, or similar synthetic data, to evaluate the potential of such models. Currie said the work is essentially conceptual: it proposes a new way for AI to learn about space from both its own and others' perspectives, treating VPT as something models can learn through vision and language. He described this as a step toward embodied cognition that lays the foundation for machines to achieve social intelligence.

The research by De Tommaso, Currie, Migno, and colleagues is expected to inspire the generation of more synthetic datasets of this kind, which could help improve humanoid robots and other embodied AI agents and advance their deployment in the real world. Gioele Migno, who recently graduated in AI and robotics from Sapienza University of Rome and joined IIT's S4HRI research line, said the next step will be to make the virtual environments more realistic, narrowing the gap between simulation and reality. That step is critical for transferring what models learn to the real world and for enabling embodied robots to make use of spatial reasoning. Future work will also explore how these capabilities allow robots to interact more effectively with humans in scenarios that involve shared spatial understanding.