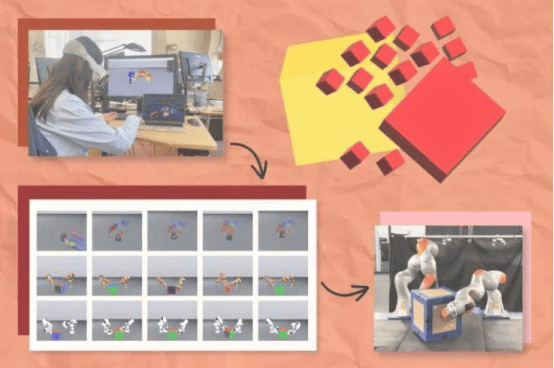

Researchers have demonstrated a new way to attack AI computer vision systems that gives them control over what the AI "sees." The study shows that the technique, called RisingAttacK, effectively manipulated all four of the widely used AI vision models it was tested against.

The problem involves so-called "adversarial attacks," where someone manipulates the data fed into an AI system to control what the system does or does not see in an image. For example, someone could manipulate an AI's ability to detect traffic lights, pedestrians, or other vehicles, which would be a safety problem for autonomous vehicles. Or a hacker could install code on an X-ray machine that causes its AI system to make inaccurate diagnoses.

"We want to find effective ways to break AI vision systems because these vision systems are often used in environments that can affect human health and safety—from self-driving cars to health technologies to security applications," said Tianfu Wu, co-corresponding author of the paper and associate professor of electrical and computer engineering at North Carolina State University.

"That means the security of these AI systems is critical. Identifying vulnerabilities is an important step toward making these systems secure because you have to identify vulnerabilities in order to defend against them."

RisingAttacK consists of a series of operations aimed at making the fewest possible changes to an image so that a user can manipulate what a vision AI "sees."
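To make "the fewest possible changes" concrete, here is a minimal sketch using a toy linear classifier rather than RisingAttacK itself, whose implementation the article does not describe. The functions `predict` and `minimal_flip`, the random weights `W` and `b`, and the `overshoot` parameter are all hypothetical stand-ins. For a linear model, the smallest change that alters the top prediction is a step straight toward the nearest decision boundary, which is what the code computes.

```python
import numpy as np

def predict(x, W, b):
    """Top-1 class of a hypothetical linear classifier with logits W @ x + b."""
    return int(np.argmax(W @ x + b))

def minimal_flip(x, W, b, overshoot=0.01):
    """Smallest L2 change that pushes x just past its nearest decision boundary.

    This is the closed-form answer for a linear model only; it is an
    illustrative assumption, not the method used in the paper.
    """
    logits = W @ x + b
    c = int(np.argmax(logits))
    best = None
    for k in range(W.shape[0]):
        if k == c:
            continue
        w = W[c] - W[k]                    # normal of the c-vs-k boundary
        gap = logits[c] - logits[k]        # how far class c leads class k
        dist = gap / np.linalg.norm(w)     # distance from x to that boundary
        if best is None or dist < best[0]:
            best = (dist, w, gap)
    _, w, gap = best
    # Step along -w just far enough (plus a small overshoot) to cross over.
    return -(1.0 + overshoot) * gap / (w @ w) * w

rng = np.random.default_rng(0)
W = rng.normal(size=(5, 64))   # 5 classes, 64 input "pixels" (toy sizes)
b = rng.normal(size=5)
x = rng.normal(size=64)

delta = minimal_flip(x, W, b)
x_adv = x + delta

print("original class: ", predict(x, W, b))
print("perturbed class:", predict(x_adv, W, b))
print("relative change:", np.linalg.norm(delta) / np.linalg.norm(x))
```

Real vision models are nonlinear, so attacks on them generally have to search for such a change iteratively instead of solving for it in one step.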

First, RisingAttacK identifies all of the visual features in an image. The program also runs an operation to determine which features are most important for achieving the attack's goal.

"For example," Wu said, "if the goal of the attack is to prevent the AI from recognizing a car, which features in the image are most important for the AI to recognize that there is a car in the image?"

Then RisingAttacK calculates the AI system's sensitivity to changes in the data—more specifically, the AI's sensitivity to changes in data for the key features.
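One common way to express a model's sensitivity to its input is the Jacobian, the matrix of derivatives of each output with respect to each input value, and the paper's title refers to right singular vectors of such a matrix. The sketch below is an assumption-laden illustration, not the authors' code: `toy_model` is a hypothetical linear-plus-softmax classifier with random weights, and the script simply computes its Jacobian and uses a singular value decomposition to find the input direction that changes the outputs the most.

```python
import numpy as np

def softmax(z):
    z = z - z.max()            # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def toy_model(x, W, b):
    """Hypothetical stand-in classifier: linear layer followed by softmax."""
    return softmax(W @ x + b)

def jacobian(x, W, b):
    """Analytic Jacobian of class probabilities w.r.t. the input.

    Shape is (num_classes, num_features). Uses the softmax derivative
    diag(p) - p p^T and the chain rule through logits = W @ x + b.
    """
    p = toy_model(x, W, b)
    return (np.diag(p) - np.outer(p, p)) @ W

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 8))    # 3 classes, 8 input features (toy sizes)
b = rng.normal(size=3)
x = rng.normal(size=8)

J = jacobian(x, W, b)

# Rows of Vt are right singular vectors: unit-length directions in input space.
# The first one is the direction the outputs are most sensitive to (to first order).
U, S, Vt = np.linalg.svd(J, full_matrices=False)
top_direction = Vt[0]

# Compare against a random unit direction of the same length.
random_direction = rng.normal(size=8)
random_direction /= np.linalg.norm(random_direction)

print("output change along top singular direction:", np.linalg.norm(J @ top_direction))
print("output change along a random direction:    ", np.linalg.norm(J @ random_direction))
```

The top right singular vector is, by construction, the unit-length input change with the largest first-order effect on the outputs, which is why a decomposition like this is a natural tool for finding small but effective perturbations.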

"This requires some computational power, but it allows us to make subtle, targeted adjustments to the key features that successfully carry out the attack," Wu said. "The end result is that two images may look identical to the human eye—and we might even clearly see a car in both images. But because of RisingAttacK, the AI will see a car in the first image but not in the second."

"The nature of RisingAttacK means we can affect the AI's ability to recognize the top 20 to 30 targets—these could be cars, pedestrians, bicycles, parking signs, and so on."

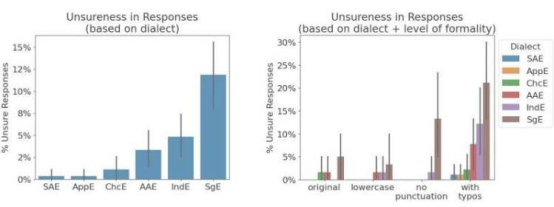

The researchers tested RisingAttacK against four of the most widely used vision AI programs (ResNet-50, DenseNet-121, ViT-B, and DeiT-B). The results show that the technique can effectively manipulate all four programs.

"While we've demonstrated RisingAttacK's ability to manipulate vision models, we are now determining how effective the technique is at attacking other AI systems, such as large language models," Wu said.

"Looking ahead, our goal is to develop techniques that can successfully defend against these kinds of attacks."

The paper "Adversarial Perturbations Are Formed by Iteratively Learning Linear Combinations of Right Singular Vectors of Adversarial Jacobian" will be presented July 15 at the International Conference on Machine Learning (ICML 2025) in Vancouver, Canada.