Wedoany.com Report, Feb. 3: OpenAI is actively evaluating alternatives to NVIDIA's AI inference chips to meet its growing demand for computing power. Amid ongoing negotiations over hardware partnerships, the company has opened technical discussions with chipmakers such as AMD and Cerebras, testing hardware that could speed up inference for applications like ChatGPT.

Since 2023, OpenAI has gradually expanded its cooperation with chip suppliers. NVIDIA remains its core hardware partner, but OpenAI has asked for lower latency from current equipment in scenarios such as code optimization and software communication. According to technical documents, the goal is to diversify hardware deployment so that alternative solutions handle roughly 10% of future inference workloads, making the computing architecture more resilient.

"We are always committed to optimizing model operational efficiency," said the head of OpenAI's hardware division. "The current exploration covers chips with different architectures, with a focus on evaluating cost per unit of computing power and energy efficiency." Notably, while OpenAI has reached a cooperation agreement with Cerebras, it has not obtained a technology license from Groq, whereas NVIDIA has deepened its technical collaboration with Groq through chip design licensing and talent acquisition.

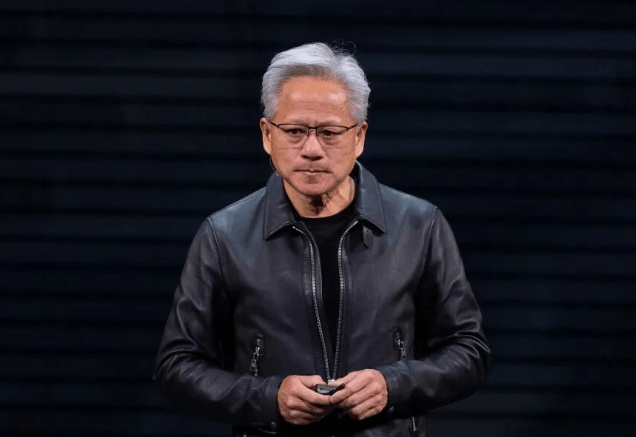

Responding to market rumors, NVIDIA CEO Jensen Huang said publicly, "The foundation of our cooperation with OpenAI is solid, and we will continue to provide hardware solutions that meet their evolving needs." According to supply chain sources, OpenAI's evaluation of alternatives is still at an early stage, and no large-scale change to its existing hardware architecture is expected within the next 12 to 18 months.