In high-end equipment manufacturing, robotics keeps pushing past traditional boundaries. Teaching a robot new skills used to require specialized programming knowledge, but a new generation of robots is moving toward “learning from anyone.” Engineers at MIT have now taken a significant step in this direction by developing a versatile demonstration interface (VDI), a tool that lets people teach a robot in whichever way suits them or the task.

Innovation Breakthrough: Triple-Mode Training Interface

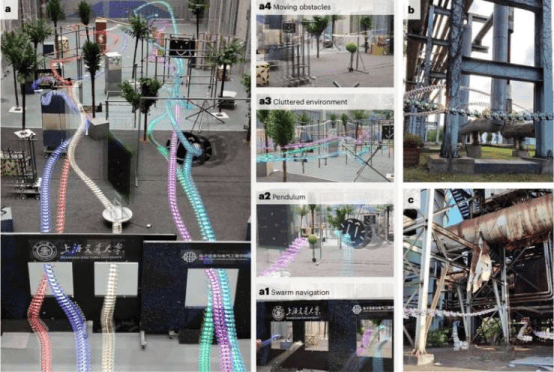

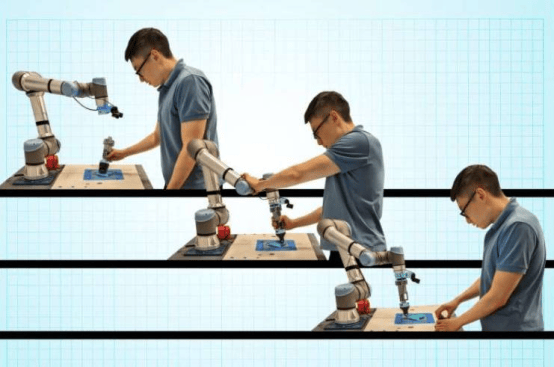

Engineers have long worked on robot assistants that can “learn from demonstrations.” Until now, such robots have mostly relied on one of three demonstration methods: teleoperation, physically moving the robot, or observing and imitating a human. The MIT team combined all three in a single training interface. The handheld, sensor-equipped tool attaches to common collaborative robotic arms. Users can teach the robot by teleoperating it, by physically guiding it, or by performing the task themselves, choosing the method that fits their preference or the task at hand.

Testing Validation: Demonstrating Diverse Advantages

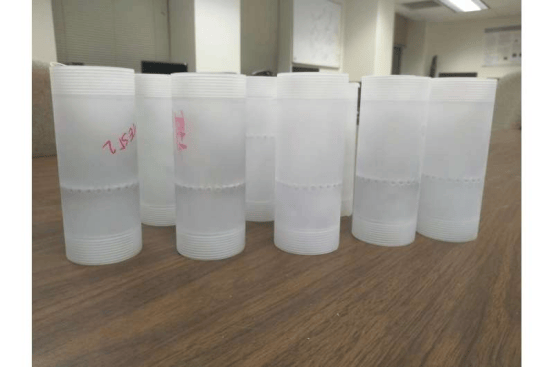

The research team tested the versatile demonstration interface on a standard collaborative robotic arm. Volunteers with manufacturing expertise used it to perform two manual tasks that are common on factory floors: press-fitting and molding. In the press-fitting task, users trained the robot to press pegs into holes; in the molding task, volunteers trained the robot to push and roll a rubbery, dough-like material evenly around the surface of a central rod.

The tests showed that the new interface makes training more flexible, widens the range of users and “teachers” who can interact with the robot, and lets the robot learn a broader set of skills. On a production line, for example, one person could remotely train the robot to handle toxic substances, another could physically guide the robot through packing products, and a third could use the attachment to draw the company logo while the robot observes and learns.

R&D Motivation: Building High-Capability “Teammates”

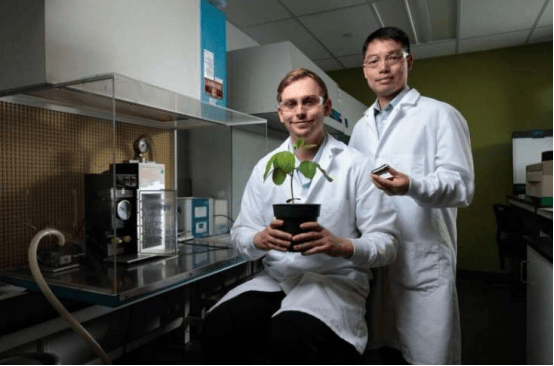

Michael Hagenow, a postdoctoral researcher in MIT’s Department of Aeronautics and Astronautics, said the team aims to create highly intelligent and skilled “teammates” that can collaborate effectively with humans on complex tasks. The team believes flexible demonstration tools are suited not only to manufacturing floors but also to settings such as homes and caregiving environments, where they could broaden the adoption of robots. Hagenow will present a paper detailing the new interface at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) in October. The paper is also available on the arXiv preprint server.

Theoretical Foundation: Integrating Emerging Learning Strategies

Julie Shah’s research group at MIT has long focused on systems that let people teach robots new tasks or skills on the job, so that factory floor workers can adjust a robot’s actions quickly and naturally without halting production to reprogram its software. The team’s new research builds on the emerging strategy of “Learning from Demonstration” (LfD), which allows robots to be trained in a more natural and intuitive way.

Hagenow and Shah reviewed the LfD literature and found that training methods fall broadly into three categories: teleoperation, kinesthetic training, and natural teaching. Depending on the person or the task, one method may work better than the other two. They therefore set out to design a tool that combines all three, allowing robots to learn more tasks from more people.

Interface Design: Feature-Rich and Flexible

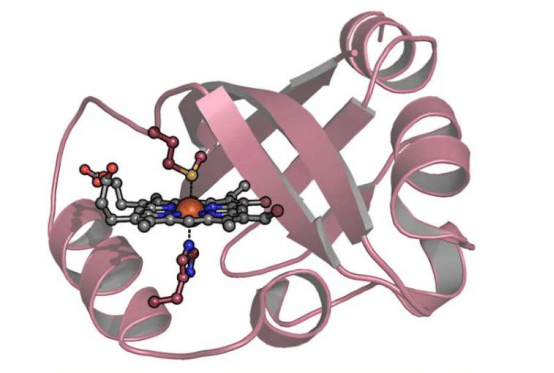

To achieve this goal, the team designed the versatile demonstration interface (VDI). It is a handheld attachment that can be mounted on a typical collaborative robotic arm. It carries cameras and fiducial markers for tracking the tool’s position and motion, as well as force sensors that measure the pressure applied during a task.

When the interface is attached to the robot, a person can teleoperate the arm while the camera records the motion for the robot to learn from, or physically guide the arm through the task. When the interface is detached, a person can hold it and perform the task directly, again with the camera recording the motion. Once the interface is reattached, the robot can imitate the recorded task.
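One way to picture what such an interface produces is a time series of tool positions and contact forces, tagged with the teaching mode that generated it. The Python sketch below is purely illustrative: the names `DemoMode`, `Sample`, and `Demonstration`, the field layout, and the units are all assumptions made for this example, not the MIT team’s software or data format.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Tuple


class DemoMode(Enum):
    """The three teaching modes described in the article."""
    TELEOPERATION = "teleoperation"  # interface attached, arm driven remotely
    KINESTHETIC = "kinesthetic"      # interface attached, person guides the arm
    NATURAL = "natural"              # interface detached, person does the task


@dataclass
class Sample:
    """One hypothetical reading from the interface."""
    t: float                              # seconds since recording started
    position: Tuple[float, float, float]  # metres (assumed camera/marker tracking)
    force: float                          # newtons (assumed force-sensor reading)


@dataclass
class Demonstration:
    """A recorded demonstration: a mode plus an ordered list of samples."""
    mode: DemoMode
    samples: List[Sample] = field(default_factory=list)

    def record(self, t: float, position: Tuple[float, float, float],
               force: float) -> None:
        self.samples.append(Sample(t, position, force))

    def peak_force(self) -> float:
        # Largest force seen, or 0.0 if nothing was recorded.
        return max((s.force for s in self.samples), default=0.0)

    def duration(self) -> float:
        # Time between the first and last sample, or 0.0 if empty.
        if not self.samples:
            return 0.0
        return self.samples[-1].t - self.samples[0].t


# Made-up numbers for a press-fitting style motion (illustration only).
demo = Demonstration(DemoMode.NATURAL)
demo.record(0.0, (0.0, 0.0, 0.10), 0.0)
demo.record(0.5, (0.0, 0.0, 0.05), 4.0)
demo.record(1.0, (0.0, 0.0, 0.02), 12.5)
print(demo.peak_force(), demo.duration())  # prints: 12.5 1.0
```

Keeping the mode alongside the samples means a learning pipeline could, in principle, treat demonstrations from all three methods uniformly while still knowing how each one was collected.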

User Feedback: Clearly Defining Advantages of Each Method

To test the usability of the attachment, the team brought the interface and a collaborative robotic arm to a local innovation center, where manufacturing experts tried it out. The researchers designed an experiment in which volunteers completed the two tasks using each of the three training methods. Volunteers generally preferred the natural method, but the manufacturing experts also noted where each method has an edge: teleoperation is better for training robots to handle hazardous or toxic materials; kinesthetic training helps adjust the positioning of robots that handle heavy packages; and natural teaching is ideal for demonstrating precise, delicate operations.
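The experts’ comments amount to a rough rule of thumb for choosing a teaching method. The short Python sketch below encodes that rule in a hypothetical helper, `suggest_mode`. The function name, the two boolean task traits, and the priority order (hazard first, then payload weight) are assumptions for illustration, not part of the study.

```python
def suggest_mode(hazardous: bool = False, heavy_payload: bool = False) -> str:
    """Suggest a teaching method from coarse task traits (illustrative only)."""
    if hazardous:
        # Keep the teacher away from hazardous or toxic materials.
        return "teleoperation"
    if heavy_payload:
        # Guide the arm by hand to adjust positioning under heavy loads.
        return "kinesthetic"
    # Otherwise fall back to the method volunteers generally preferred,
    # which the experts also favoured for precise, delicate operations.
    return "natural"


print(suggest_mode(hazardous=True))      # prints: teleoperation
print(suggest_mode(heavy_payload=True))  # prints: kinesthetic
print(suggest_mode())                    # prints: natural
```

In practice the choice would also depend on the teacher’s own preference, which is the point of offering all three methods in one tool.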

Future Outlook: Continuous Improvement and Expanded Applications

Hagenow envisions using the demonstration interface in flexible manufacturing environments, where one robot might assist with a range of tasks that each benefit from a specific type of demonstration. He plans to refine the attachment’s design based on user feedback and then use the new design to test how well robots learn. The team believes that interfaces which expand the ways end users can interact with robots during teaching will make collaborative robots more flexible. The work is expected to open new opportunities in high-end equipment manufacturing and to broaden the use of robots in other settings.