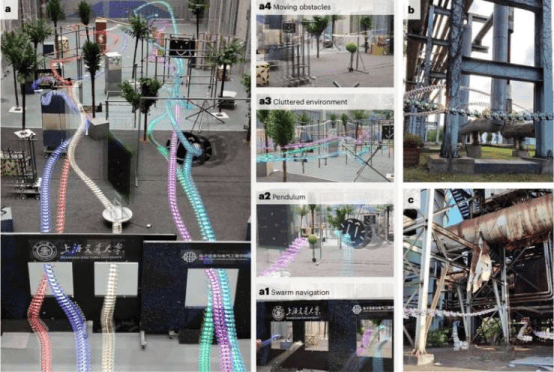

Researchers at Shanghai Jiao Tong University (SJTU) have reported a significant advance in autonomous drone navigation: a new method inspired by the flight capabilities of insects. The findings were published in the journal Nature Machine Intelligence.

Although drones are now widely used, most still require manual operation, are prone to collisions in cluttered, crowded, or unknown environments, and often rely on expensive, bulky components. The approach developed by the SJTU team enables multiple drones to navigate complex environments autonomously and at high speed.

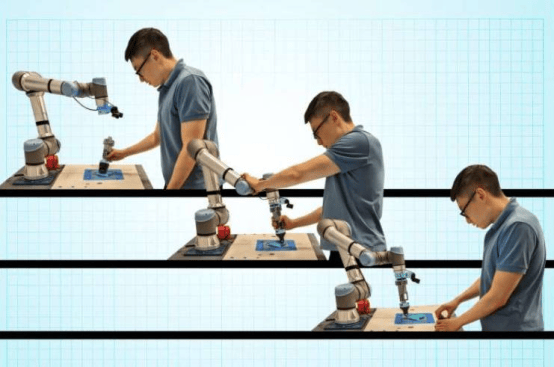

Inspired by the exceptional flight control of tiny insects such as flies, the researchers set out to replicate their high-performance flight, which depends on tightly integrated perception, planning, and control running on extremely limited onboard computing resources. Traditional methods for controlling multiple drones split the autonomous navigation task into separate modules, which can lead to error accumulation, response delays, and increased collision risk.

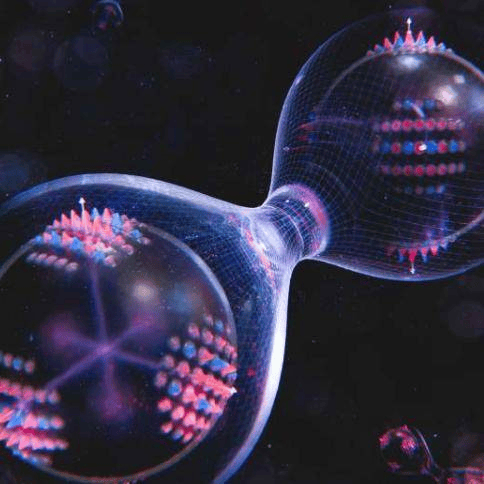

To address this, the team replaced classical modular pipelines with a compact end-to-end policy built on a newly developed lightweight artificial neural network (ANN). The network generates control commands for quadrotors directly from ultra-low-resolution 12×16 depth maps; despite the coarse input, it is able to perceive its surroundings and plan actions. It was trained efficiently in a custom simulator that supports both single-agent and multi-agent training modes.
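The article does not say how the 12×16 maps are produced. As an assumption for illustration, suppose they are downsampled from a conventional 480×640 depth image, which is exactly 40 times larger in each dimension. A natural choice for obstacle avoidance is min-pooling: keep the closest depth in each 40×40 block, so that thin obstacles are not averaged away. The function name `min_pool_depth` and the 480×640 source resolution are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def min_pool_depth(depth: Matrix, out_h: int = 12, out_w: int = 16) -> Matrix:
    """Downsample a depth image by keeping the minimum (closest) depth per block.

    Assumes the input height and width are exact multiples of out_h and out_w.
    """
    h, w = len(depth), len(depth[0])
    if h % out_h or w % out_w:
        raise ValueError("input size must be a multiple of the output size")
    bh, bw = h // out_h, w // out_w  # block size, e.g. 40 x 40 for 480 x 640
    return [
        [
            min(depth[r][c]
                for r in range(i * bh, (i + 1) * bh)
                for c in range(j * bw, (j + 1) * bw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    # Hypothetical 480x640 frame: open space 8 m away, a thin pole 1.5 m away.
    frame = [[8.0] * 640 for _ in range(480)]
    for r in range(480):
        for c in range(300, 303):  # the pole is three pixels wide
            frame[r][c] = 1.5
    small = min_pool_depth(frame)
    print(len(small), len(small[0]))  # 12 16
    print(small[0][7])                # 1.5: the pole survives downsampling
```

With average pooling, the three-pixel pole would instead read (3 × 1.5 + 37 × 8.0) / 40 ≈ 7.51 m in its cell, almost indistinguishable from open space; min-pooling errs on the side that collision avoidance needs.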

A key advantage of the method is its highly compact, lightweight deep neural network, which has only three convolutional layers. The researchers tested it on an embedded computing board priced at just $21, where it ran smoothly and energy-efficiently. Training took only 2 hours, and the system supports multi-robot navigation without centralized planning or explicit communication, which makes it scalable.
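The article specifies only the input size (12×16) and the depth of the network (three convolutional layers). The sketch below is an untrained forward pass under assumed details: the channel widths (4, 8, 8), 3×3 kernels, ReLU activations, global average pooling, the four-element bounded output, and the class name `TinyDepthPolicy` are all hypothetical, not the published architecture. With these choices, three unpadded 3×3 convolutions shrink the map from 12×16 to 10×14, 8×12 and 6×10, for about 67,000 multiply-adds per frame, a load that even a very cheap board can handle.

```python
import math
import random
from typing import List, Sequence

Matrix = List[List[float]]
Tensor = List[Matrix]  # indexed as [channel][row][col]


def conv2d_relu(x: Tensor, weights: List[List[Matrix]], bias: List[float]) -> Tensor:
    """Valid (unpadded), stride-1 2-D convolution followed by ReLU.

    weights[o][i] is the k x k kernel from input channel i to output channel o.
    """
    k = len(weights[0][0])
    out_h = len(x[0]) - k + 1
    out_w = len(x[0][0]) - k + 1
    out: Tensor = []
    for o, kernels in enumerate(weights):
        fmap = [[0.0] * out_w for _ in range(out_h)]
        for r in range(out_h):
            for c in range(out_w):
                s = bias[o]
                for i, kern in enumerate(kernels):
                    for dr in range(k):
                        for dc in range(k):
                            s += kern[dr][dc] * x[i][r + dr][c + dc]
                fmap[r][c] = max(0.0, s)  # ReLU
        out.append(fmap)
    return out


class TinyDepthPolicy:
    """Hypothetical 3-conv-layer policy: 12x16 depth map -> 4 bounded commands."""

    def __init__(self, channels: Sequence[int] = (1, 4, 8, 8), k: int = 3,
                 n_out: int = 4, seed: int = 0):
        rng = random.Random(seed)
        self.convs = []
        for c_in, c_out in zip(channels[:-1], channels[1:]):
            std = 1.0 / math.sqrt(c_in * k * k)  # fan-in scaled random init
            w = [[[[rng.gauss(0.0, std) for _ in range(k)] for _ in range(k)]
                  for _ in range(c_in)] for _ in range(c_out)]
            self.convs.append((w, [0.0] * c_out))
        std = 1.0 / math.sqrt(channels[-1])
        self.head_w = [[rng.gauss(0.0, std) for _ in range(channels[-1])]
                       for _ in range(n_out)]
        self.head_b = [0.0] * n_out

    def features(self, depth: Matrix) -> Tensor:
        x: Tensor = [depth]  # a single input channel
        for w, b in self.convs:
            x = conv2d_relu(x, w, b)
        return x

    def __call__(self, depth: Matrix) -> List[float]:
        fmaps = self.features(depth)
        # Global average pooling: one number per channel.
        pooled = [sum(map(sum, f)) / (len(f) * len(f[0])) for f in fmaps]
        # Linear head; tanh bounds each command component to [-1, 1].
        return [math.tanh(sum(wi * p for wi, p in zip(row, pooled)) + b)
                for row, b in zip(self.head_w, self.head_b)]


if __name__ == "__main__":
    policy = TinyDepthPolicy()
    rng = random.Random(1)
    # Three drones, each with its own (normalised) 12x16 depth map.
    observations = [[[rng.random() for _ in range(16)] for _ in range(12)]
                    for _ in range(3)]
    for drone_id, depth in enumerate(observations):
        command = policy(depth)  # uses only this drone's own observation
        print(drone_id, [round(v, 3) for v in command])
```

Each drone runs its own copy of the policy on its own depth map, and the per-drone calls never exchange data; that is the sense in which no centralized planner or communication link is needed. The weights here are random, so the printed commands carry no meaning until the network is trained.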

The team found that embedding the quadrotor's physical model directly into the training process significantly improves both training efficiency and real-world performance. This work challenges the assumption that “more data is always better,” suggesting that structural alignment and built-in physical priors may matter more than sheer data volume.
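The article does not detail how the physical model enters training. One well-known way to do this is differentiable simulation: the dynamics are written so that the gradient of a trajectory cost flows back through every simulated step into the policy parameters. The toy below illustrates that idea under strong simplifying assumptions: a 1-D point mass with drag stands in for the quadrotor, a two-gain PD controller (`kp`, `kd`) stands in for the neural network, and forward-mode automatic differentiation with dual numbers supplies the gradient. All names, constants and cost terms are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

Num = Union[float, "Dual"]


@dataclass
class Dual:
    """Forward-mode autodiff number val + der * eps, where eps ** 2 == 0."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(o: Num) -> "Dual":
        return o if isinstance(o, Dual) else Dual(float(o))

    def __add__(self, o: Num) -> "Dual":
        o = Dual.lift(o)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, o: Num) -> "Dual":
        o = Dual.lift(o)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, o: Num) -> "Dual":
        return Dual.lift(o) - self

    def __mul__(self, o: Num) -> "Dual":
        o = Dual.lift(o)  # product rule carries the derivative
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__


def rollout_loss(kp: Num, kd: Num, goal: float = 1.0, dt: float = 0.05,
                 steps: int = 60, drag: float = 0.1) -> Num:
    """Cost of flying a 1-D point mass from x = 0 to `goal` under a PD policy.

    Dynamics: x' = v, v' = a - drag * v, integrated with semi-implicit Euler.
    Only +, - and * are used, so Dual inputs differentiate through the physics.
    """
    x, v, loss = 0.0, 0.0, 0.0
    for _ in range(steps):
        a = kp * (goal - x) - kd * v      # policy: acceleration command
        v = v + dt * (a - drag * v)       # physics step, velocity first
        x = x + dt * v
        loss = loss + dt * ((x - goal) * (x - goal) + 0.01 * a * a)
    return loss


def value_and_grad(kp: float, kd: float) -> Tuple[float, float, float]:
    """Return (loss, dloss/dkp, dloss/dkd), one forward-mode pass per gain."""
    with_kp = rollout_loss(Dual(kp, 1.0), Dual(kd, 0.0))
    with_kd = rollout_loss(Dual(kp, 0.0), Dual(kd, 1.0))
    return with_kp.val, with_kp.der, with_kd.der


def train(kp: float = 1.0, kd: float = 0.5, lr: float = 0.05,
          iters: int = 100) -> Tuple[float, float, List[float]]:
    """Plain gradient descent on the two controller gains."""
    history = []
    for _ in range(iters):
        loss, g_kp, g_kd = value_and_grad(kp, kd)
        history.append(loss)
        kp -= lr * g_kp
        kd -= lr * g_kd
    return kp, kd, history


if __name__ == "__main__":
    kp, kd, history = train()
    print(f"loss {history[0]:.4f} -> {history[-1]:.4f}, kp={kp:.2f}, kd={kd:.2f}")
```

Because the gradient is exact for the simulated dynamics, it can be checked against a finite difference. A model-free learner would instead have to estimate the same quantity from many sampled rollouts, which gives one intuition for why a physics prior can reduce the amount of data needed.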

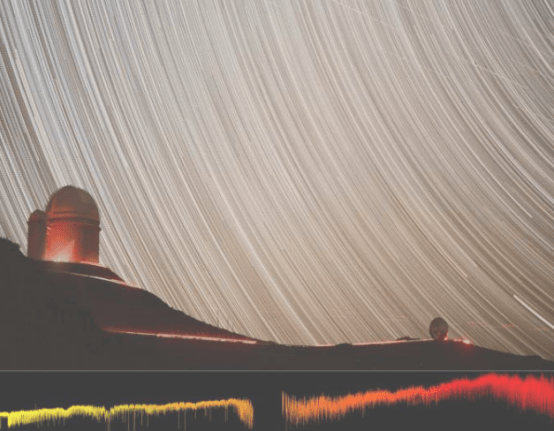

In the future, this method could be applied to a wider range of aircraft types, extending the capabilities of ultra-lightweight drones to tasks such as automatic selfies, drone racing, live sports broadcasting, search and rescue, and warehouse inspection. The research team is currently exploring the use of optical flow instead of depth maps to achieve fully autonomous flight. It is also pursuing interpretability in end-to-end learning systems, aiming to better understand the network's internal representations and to provide insights into insect behavior.