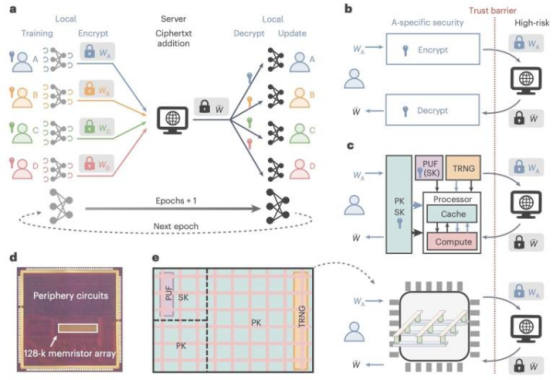

In recent years, machine learning has advanced rapidly and shown enormous potential across many fields. However, concerns about data privacy and security have grown alongside it, especially in sensitive sectors such as healthcare and finance. To address this challenge, researchers from Tsinghua University, China Mobile Research Institute, and Hebei University have jointly developed a novel in-memory computing chip designed to make federated learning both more efficient and more secure.

Federated learning is a machine learning approach in which multiple users or organizations collaboratively train a shared neural network without exchanging their raw data. Each participant trains on its own data locally and shares only model updates, so AI can be applied without exposing private records. However, conventional federated learning implementations struggle at the edge: each device must locally perform computationally intensive encryption tasks, such as key generation and error-polynomial computation, which consume substantial time and energy.
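To make the "train locally, share only updates" idea concrete, here is a minimal sketch of federated averaging (FedAvg), a widely used aggregation rule. It is an illustration under stated assumptions, not the method used on the chip: the article does not say which aggregation rule the researchers used, the "model" is a single weight fitting `y = 3x`, and the four clients only mirror the four participants in the case study. The names `local_train`, `fed_avg`, and `clients` are hypothetical, and the encryption steps discussed in the article are omitted.

```python
def local_train(w, data, lr=0.5, epochs=20):
    """Plain gradient descent on one client's private (x, y) pairs for y = w * x."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(client_datasets, rounds=10):
    """Toy FedAvg: the server only ever sees trained weights, never raw data."""
    w_global = 0.0
    for _ in range(rounds):
        # Each client starts from the current global weight and trains locally.
        local_ws = [local_train(w_global, data) for data in client_datasets]
        sizes = [len(data) for data in client_datasets]
        # Server step: average client weights, weighted by local dataset size.
        w_global = sum(w * n for w, n in zip(local_ws, sizes)) / sum(sizes)
    return w_global


# Hypothetical data: four clients whose x values cover different ranges.
clients = [
    [(x, 3.0 * x) for x in [0.2 * k + 0.1 * c for k in range(1, 5)]]
    for c in range(4)
]
print(round(fed_avg(clients), 4))  # → 3.0
```

Real systems would exchange full weight tensors (and, in the setting the article describes, encrypt them before upload), but the pattern is the same: local training, then a weighted average on the server.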

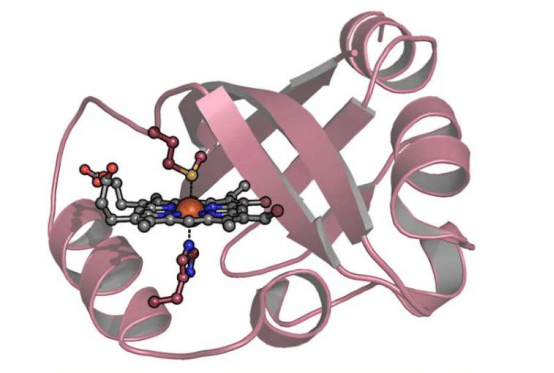

To tackle these challenges, the researchers proposed a new in-memory computing chip architecture based on memristors. Memristors are non-volatile electronic components whose resistance depends on the history of the current that has flowed through them, which lets them both store information and perform computation. In their paper, Li Xueqi, Gao Bin, and their colleagues describe the chip's design and show that it dramatically reduces data movement between memory and processing units, lowering the energy needed to train neural networks collaboratively through federated learning.
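The reason in-memory computing cuts data movement is that a memristor array can compute a matrix-vector product where the weights are stored: each device passes a current equal to voltage times conductance (Ohm's law), and the currents on a shared column wire add up (Kirchhoff's current law). The sketch below models an ideal crossbar under that assumption, ignoring wire resistance, device nonlinearity, and analog-to-digital conversion. Encoding signed weights as the difference of two conductances is a common scheme assumed here for illustration; the article does not say how this chip maps weights. The function names are hypothetical.

```python
def crossbar_mvm(voltages, conductances):
    """Ideal crossbar: column current I_j = sum_i V_i * G[i][j].

    With voltages in volts and conductances in microsiemens, currents are in microamps.
    """
    currents = [0.0] * len(conductances[0])
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law per device; the column wire sums the currents (Kirchhoff).
            currents[j] += v * g
    return currents


def signed_mvm(voltages, weights):
    """Assumed differential-pair mapping: W = G_pos - G_neg, both non-negative."""
    g_pos = [[max(w, 0.0) for w in row] for row in weights]
    g_neg = [[max(-w, 0.0) for w in row] for row in weights]
    i_pos = crossbar_mvm(voltages, g_pos)
    i_neg = crossbar_mvm(voltages, g_neg)
    # Subtracting the two column currents recovers the signed product V @ W.
    return [p - n for p, n in zip(i_pos, i_neg)]


print(crossbar_mvm([1.0, 2.0], [[1.0, 2.0], [3.0, 4.0]]))  # → [7.0, 10.0]
print(signed_mvm([1.0, 2.0], [[1.0, -2.0], [0.5, 3.0]]))   # → [2.0, 4.0]
```

In hardware, every multiply-accumulate in these loops happens in parallel in a single read operation, so the weights never travel to a separate processor.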

The chip not only integrates a physical unclonable function (PUF) for generating secure keys during encrypted communication but also features a true random number generator to provide unpredictable values for encryption. The researchers validated the chip's effectiveness through a case study in which four participants collaboratively trained a two-layer long short-term memory (LSTM) network with 482 weights for sepsis prediction. Test results showed that the chip achieved an accuracy only 0.12% lower than centralized software-based training while significantly reducing both energy and time consumption.
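A physical unclonable function turns manufacturing variation into a device-specific secret. The toy model below assumes a simple, commonly described construction, not necessarily the one on this chip: compare the conductances of paired memristors and emit one bit per pair, then hash the bits into a key. The conductance values are made up, and Python's `secrets` module (a cryptographically secure generator seeded by the operating system) merely stands in for the chip's hardware true random number generator. Function names are hypothetical.

```python
import hashlib
import secrets


def puf_response(conductances):
    """Toy PUF: one bit per device pair, 1 if the first device conducts more."""
    return [1 if a > b else 0 for a, b in zip(conductances[0::2], conductances[1::2])]


def derive_key(bits):
    """Hash the raw PUF bits into a fixed-length 256-bit key (hex string)."""
    return hashlib.sha256(bytes(bits)).hexdigest()


def trng_bits(n):
    """Stand-in for a hardware TRNG: n unpredictable bits from the OS CSPRNG."""
    return [secrets.randbits(1) for _ in range(n)]


# Hypothetical conductance readings (arbitrary units).
chip_a = [5.1, 4.9, 3.0, 3.2, 7.7, 7.1, 2.0, 2.5]
chip_a_reread = [5.0, 4.95, 3.05, 3.25, 7.6, 7.2, 2.1, 2.4]  # same chip, small read noise
chip_b = [4.8, 5.3, 3.4, 3.1, 6.9, 7.4, 2.6, 2.2]            # a different chip

print(puf_response(chip_a))  # → [1, 0, 1, 0]
print(derive_key(puf_response(chip_a)) == derive_key(puf_response(chip_a_reread)))  # → True
print(derive_key(puf_response(chip_a)) == derive_key(puf_response(chip_b)))         # → False
```

Because read noise can flip pairs whose conductances are nearly equal, practical PUFs typically add error correction before hashing; this sketch skips that step.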

This research highlights the potential of memristor-based in-memory computing architectures to improve both the efficiency and the privacy protection of federated learning. As the technology matures, the chip is expected to be applied to other deep learning algorithms and a wider range of real-world tasks, which could drive broader adoption of artificial intelligence.