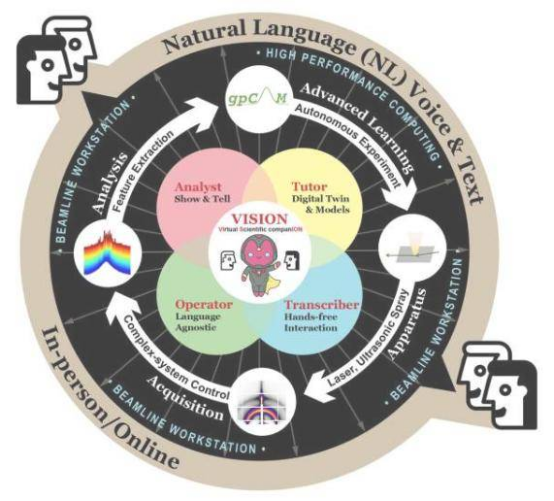

Scientists at the U.S. Department of Energy's Brookhaven National Laboratory have conceived, developed, and tested a new voice-controlled artificial intelligence assistant called Virtual Intelligent Scientific companion (VISION). This innovative tool is designed to help busy scientists perform experiments more efficiently and accelerate the pace of scientific discovery.

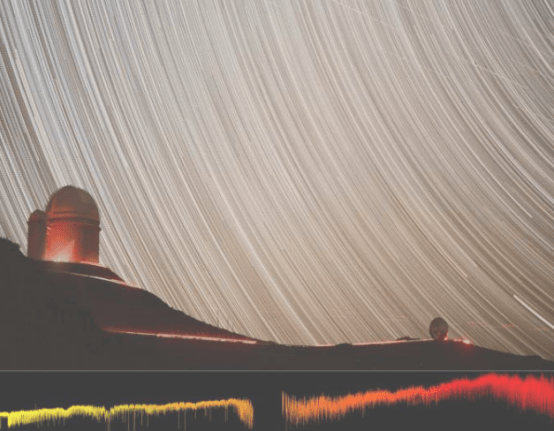

The VISION system was jointly developed by researchers at the laboratory's Center for Functional Nanomaterials (CFN) and experts at the National Synchrotron Light Source II (NSLS-II). VISION is meant to bridge the knowledge gap that complex instruments create: scientists can simply tell it in plain language what they want to do on an instrument, whether that is running an experiment, starting data analysis, or visualizing results. This approach saves scientists time and improves experimental efficiency.

In a recent paper published in Machine Learning: Science and Technology, the Brookhaven team described VISION in detail. Esther Tsai, a scientist in CFN's AI-Accelerated Nanoscience group, said that AI's impact on science is exciting and an area the scientific community should explore. VISION acts as an assistant that can answer basic questions about how an instrument performs and how to operate it, making routine instrument work more convenient for scientists.

What sets VISION apart is its use of large language models (LLMs) that can generate text in natural human language, make decisions about operations, and produce the computer code needed to drive instruments. Its internal architecture is organized into multiple "cognitive modules," each containing an LLM that handles a specific task. These modules can be combined into a capable assistant whose modules carry out their work transparently, without the scientist having to manage each one.

Users simply walk up to a beamline, speak their request in natural language, and VISION translates the command into code that is sent back to the beamline workstation in seconds. This process greatly simplifies experimental operations, allowing scientists to focus more on the science itself.
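The modular, request-to-code flow described above can be illustrated with a minimal sketch. Everything in it is hypothetical: the module names, the keyword rules standing in for the LLM inside each module, and the generated command strings (`run_measurement`, `start_analysis`, `plot_results`) are invented for illustration, since the article does not describe VISION's code. The sketch also assumes speech has already been transcribed to text.

```python
from typing import Callable, Dict

# A "cognitive module" is modeled as a text-in, text-out function.
# In VISION each module contains an LLM; here simple rules stand in for
# the LLMs so the sketch runs without any model. All names are hypothetical.
Module = Callable[[str], str]


def classify(request: str) -> str:
    """Pick which task module should handle a transcribed request."""
    text = request.lower()
    if "analy" in text:
        return "analysis"
    if "plot" in text or "visualiz" in text:
        return "visualization"
    return "operation"


def operation_module(request: str) -> str:
    # Hypothetical: emit one line of instrument-control code.
    return f"run_measurement(note={request!r})"


def analysis_module(request: str) -> str:
    # Hypothetical: emit code that starts a data-analysis job.
    return f"start_analysis(note={request!r})"


def visualization_module(request: str) -> str:
    # Hypothetical: emit code that plots results.
    return f"plot_results(note={request!r})"


# Registry of task modules; a new capability is added as a new entry.
MODULES: Dict[str, Module] = {
    "operation": operation_module,
    "analysis": analysis_module,
    "visualization": visualization_module,
}


def assistant(request: str) -> str:
    """Chain modules: classify the request, then let the chosen module write code.

    The returned string stands for the code sent to the beamline workstation.
    """
    task = classify(request)
    return MODULES[task](request)


if __name__ == "__main__":
    print(assistant("Please analyze the last scan"))
```

Keeping every module behind the same text-in, text-out interface is what would let a new module be registered without touching the others. This is one plausible reading of the article's modular design, not a description of how VISION is actually implemented.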

As AI technology continues to evolve, VISION's creators aim to build a scientific tool that keeps pace with that progress. They want VISION to incorporate new instrument capabilities and to scale as needed so it can handle multiple tasks seamlessly.

The VISION architecture is now complete and is being actively demonstrated at NSLS-II's Complex Materials Scattering (CMS) beamline. The team's goal is to test it further with beamline scientists and users and eventually bring the virtual AI companion to additional beamlines. The team describes the CFN/NSLS-II collaboration as invaluable and is collecting feedback to better understand users' needs and support their research.