Over the past few years, generative AI models have continued to evolve and can now produce personalized content from specific inputs or instructions. Although image generation models are widely used, precise control over the generated images remains a major challenge. At the Conference on Computer Vision and Pattern Recognition (CVPR 2025), held in Nashville from June 11 to 15, 2025, NVIDIA researchers presented a paper introducing a new machine learning method called DiffusionRenderer. The method aims to advance image generation and editing by letting users precisely adjust specific attributes of an image.

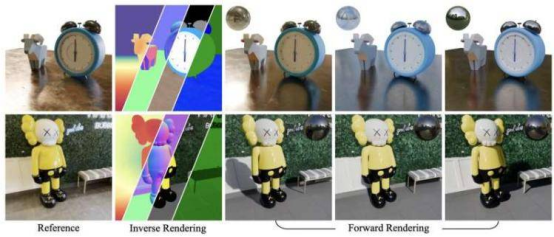

Sanja Fidler, NVIDIA Vice President of AI Research, stated: “Generative AI has made remarkable progress in visual creation, but it has introduced entirely new creative workflows, and controllability remains insufficient.” She said that DiffusionRenderer is designed to combine the precision of traditional graphics pipelines with the flexibility of AI, as a step toward next-generation rendering technology that is more accessible, more controllable, and easy to integrate into existing tools. The method proposed by Fidler and her team converts a single 2D video into a graphics-compatible scene representation, so that users can adjust lighting and materials to generate new content that meets their needs. She emphasized: “DiffusionRenderer is a major breakthrough, simultaneously addressing two key challenges: inverse rendering to extract geometry and materials from real-world videos, and forward rendering to generate realistic images and videos from scene representations.”

What makes DiffusionRenderer unique is that it integrates generative AI into the core of the graphics workflow, making traditionally time-consuming tasks such as asset creation, relighting, and material editing much more efficient. This neural rendering method is based on diffusion models, which generate coherent images by progressively refining random noise. Unlike previous image generation techniques, DiffusionRenderer first estimates G-buffers (geometry buffers, which store per-pixel scene properties such as geometry and materials), then uses these representations to render new realistic images. Fidler said the team has also made progress in constructing high-quality synthetic datasets with precise lighting and material annotations, which help the model learn accurate scene decomposition and reconstruction.
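
To make the role of G-buffers concrete, here is a minimal sketch of why a per-pixel scene representation makes relighting straightforward. It is not DiffusionRenderer's method: the article describes a diffusion-based neural renderer, whereas this example swaps in classical Lambertian (diffuse) shading as a stand-in for the forward rendering step. The buffer layout (`albedo`, `normal`) and the function names `shade_pixel` and `relight` are assumptions made for illustration only.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def shade_pixel(albedo, normal, light_dir, light_color=(1.0, 1.0, 1.0)):
    """Classical Lambertian shading for one pixel (illustrative stand-in,
    not the neural forward renderer described in the article)."""
    n = normalize(normal)
    l = normalize(light_dir)
    # Cosine of the angle between surface normal and light, clamped at zero
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(a * c * n_dot_l for a, c in zip(albedo, light_color))

def relight(gbuffer, light_dir, light_color=(1.0, 1.0, 1.0)):
    """Re-render every pixel of a hypothetical G-buffer under a new light."""
    return [
        [shade_pixel(px["albedo"], px["normal"], light_dir, light_color)
         for px in row]
        for row in gbuffer
    ]

# A tiny 1x2 "G-buffer": a red surface facing the camera (+z)
# and a blue surface facing right (+x). Values are made up.
gbuffer = [[
    {"albedo": (0.8, 0.2, 0.2), "normal": (0.0, 0.0, 1.0)},
    {"albedo": (0.2, 0.2, 0.8), "normal": (1.0, 0.0, 0.0)},
]]

front_lit = relight(gbuffer, light_dir=(0.0, 0.0, 1.0))  # light from the camera
side_lit = relight(gbuffer, light_dir=(1.0, 0.0, 0.0))   # light from the right
```

Because geometry and material are stored separately from lighting, changing `light_dir` or editing an `albedo` value produces a new image without touching the rest of the scene. Roughly speaking, this separation is what inverse rendering recovers from a video and what forward rendering then consumes.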

Looking ahead, DiffusionRenderer holds promise for both robotics researchers and creative professionals. Content creators in video games, advertising, and film production could use it to add, remove, or edit specific image attributes with high precision. Computer scientists could also use it to create realistic data for training robotics or image classification algorithms. Fidler added: “It could also have a significant impact on simulation and physical AI, providing diverse datasets for training robots and autonomous vehicles.” The team’s future work will focus on improving result quality, increasing runtime efficiency, and adding features such as semantic control, object synthesis, and more advanced editing tools.
