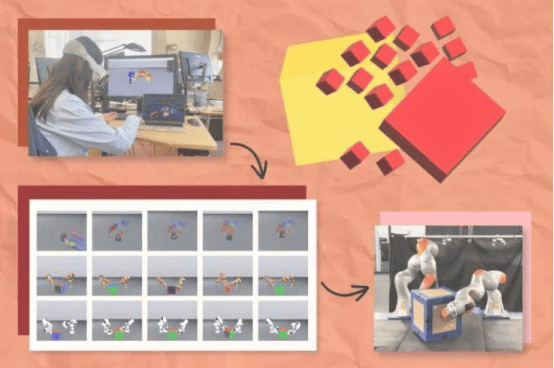

When generative AI models such as ChatGPT answer a question, they draw on foundation models trained on massive datasets. Engineers hope to build similar foundation models that teach robots new skills, but collecting robot training data and transferring it between machines remains difficult. Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Institute for Robotics and Artificial Intelligence have developed “PhysicsGen,” a simulation-driven approach that tailors robot training data to a particular machine so that it can complete tasks efficiently.

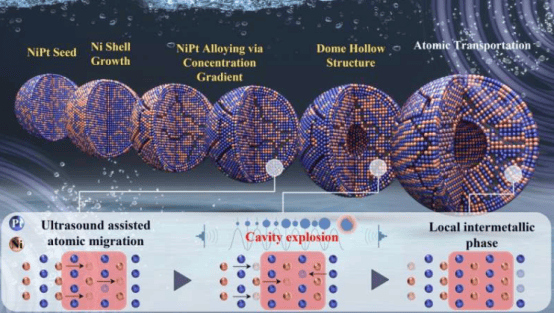

PhysicsGen creates data tailored to a specific robot in three steps. First, a VR headset tracks how a human manipulates objects, and these interactions are mapped into a 3D physics simulator. Next, the tracked points are remapped onto a 3D model of the target robot and moved to its precise “joints.” Finally, PhysicsGen applies trajectory optimization to simulate the most efficient motions for completing the task. Each simulation becomes a detailed training data point, and together they guide the robot through multiple ways of handling objects.
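The three steps can be illustrated with a toy sketch. This is not the researchers' implementation: the function names, the linear retargeting rule, the quadratic cost, and the Gaussian perturbations are all assumptions chosen for brevity, and a hard-coded list of 2D points stands in for VR tracking. A real system would use a physics simulator and the robot's actual kinematics.

```python
import random

def retarget(human_points, scale, offset):
    """Step 2 (toy): map tracked human keypoints into a robot's workspace.
    A hypothetical scale-and-shift stands in for real kinematic retargeting."""
    return [[scale * c + o for c, o in zip(p, offset)] for p in human_points]

def cost(traj, ref, weight):
    """Tracking error to the reference plus a penalty on jerky motion."""
    track = sum((a - b) ** 2 for p, r in zip(traj, ref) for a, b in zip(p, r))
    smooth = sum((a - b) ** 2 for p, q in zip(traj, traj[1:]) for a, b in zip(p, q))
    return 0.5 * track + 0.5 * weight * smooth

def optimize(ref, weight=1.0, step=0.1, iters=200):
    """Step 3 (toy): gradient descent on `cost`, keeping start and goal fixed."""
    traj = [list(p) for p in ref]
    for _ in range(iters):
        new = [list(p) for p in traj]
        for i in range(1, len(traj) - 1):
            for d in range(len(traj[i])):
                track_grad = traj[i][d] - ref[i][d]
                smooth_grad = 2 * traj[i][d] - traj[i - 1][d] - traj[i + 1][d]
                new[i][d] = traj[i][d] - step * (track_grad + weight * smooth_grad)
        traj = new
    return traj

def expand(seed, n, noise, rng):
    """Turn one retargeted demonstration into n optimized variants."""
    demos = []
    for _ in range(n):
        noisy = [list(p) for p in seed]
        for p in noisy[1:-1]:          # perturb interior waypoints only
            for d in range(len(p)):
                p[d] += rng.gauss(0.0, noise)
        demos.append(optimize(noisy))
    return demos

# Step 1 (stand-in): a short 2D path that VR tracking might have recorded.
human_demo = [[0.0, 0.0], [0.1, 0.3], [0.2, 0.1], [0.3, 0.4], [0.4, 0.4]]
robot_path = retarget(human_demo, scale=2.0, offset=(0.5, 0.0))
dataset = expand(robot_path, n=1000, noise=0.05, rng=random.Random(0))
print(len(dataset), "simulated demonstrations from 1 human demonstration")
```

The point of the sketch is the data flow: one recorded demonstration is retargeted once, then perturbed and re-optimized many times, so the dataset grows without any further human recording.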

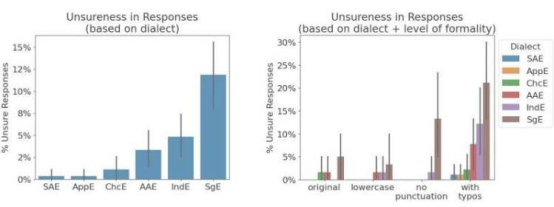

“PhysicsGen does not require humans to re-record demonstrations for every machine; instead, it expands data in an autonomous and efficient way,” said Lujie Yang, a PhD student in MIT’s Department of Electrical Engineering and Computer Science and lead author of the project. The system can transform a small number of human demonstrations into thousands of simulated demonstrations, significantly improving the robot’s task execution accuracy. In experiments, digital robots achieved an 81% success rate in rotating blocks to target positions, 60% higher than the baseline trained only on human demonstrations. PhysicsGen also improved collaboration between virtual robotic arms, enabling two pairs of robots to successfully complete tasks 30% more often than the baseline trained solely on human data.

The potential of PhysicsGen extends beyond improving the performance of existing robots: it can convert data collected for older robots or different environments into instructions suitable for new machines. In the future, PhysicsGen could build a diverse library of physical interactions, helping robots take on entirely new tasks. MIT researchers are also exploring how to use vast unstructured resources, such as internet videos, as simulation seeds that turn everyday visual content into usable robot data.