Viewed from a distance, the Great Pyramid looks smooth, but up close it reveals itself as tiers of massive limestone blocks, a stepped profile rather than a gentle slope. Exponential technological growth often looks the same way: from afar it resembles a smooth curve, but up close it is a series of breakthrough steps.

Gordon Moore, who went on to co-found Intel, predicted in 1965 that the number of transistors on an integrated circuit would double every year; in 1975 he revised the pace to every two years. The often-quoted 18-month figure, which refers to performance rather than transistor count, came from Intel executive David House. CPU performance initially tracked this trend, but single-thread gains slowed in the mid-2000s as clock speeds stalled. Growth in computing then shifted to the GPU, where Nvidia CEO Jensen Huang built the company's technology base step by step, through gaming, then computer vision, then generative AI.
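To see how much the choice of doubling period matters, here is a minimal sketch assuming idealized growth N(t) = N_0 · 2^(t/T), where T is the doubling period. The helper name `growth_factor` is illustrative and not taken from any library.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times a quantity multiplies if it doubles every doubling_period_years."""
    return 2 ** (years / doubling_period_years)


# Compare the doubling periods discussed above over one decade.
periods = [
    ("every year (1965 forecast)", 1.0),
    ("every two years (1975 revision)", 2.0),
    ("every 18 months (House)", 1.5),
]
for label, period in periods:
    print(f"{label}: x{growth_factor(10, period):,.0f} over 10 years")
```

Over a decade, a one-year doubling period compounds to roughly a thousandfold increase, while a two-year period gives only a thirty-two-fold increase.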

Technological evolution alternates between sprints and plateaus, and generative AI is no exception. The current wave is driven by the Transformer architecture. Dario Amodei, co-founder of Anthropic, put it this way: "Exponential growth will continue until it stops. Every year we think, 'Things can't keep growing exponentially,' and every year they do." New paradigms keep emerging within large language models. In 2024, for example, DeepSeek used a mixture-of-experts (MoE) architecture, in which each token activates only a small subset of the model's parameters, to train efficient models on a relatively modest budget. Nvidia, in turn, is building its NVLink interconnect into the Rubin platform to speed up MoE inference, where experts are spread across many GPUs, and to lower its cost.
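The efficiency of MoE comes from routing: a small gating function scores every expert, but only the top-k experts actually run for each token. The sketch below shows generic top-k softmax gating with toy experts. It is an illustration of the idea under simplified assumptions, not DeepSeek's actual router, and `moe_forward` and its parameters are hypothetical names.

```python
import math
import random


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route vector x to the top-k experts and mix their outputs.

    gate_weights: one (hypothetical, learned) router vector per expert.
    experts: callables mapping a vector to a vector of the same length.
    Only k experts are evaluated, so per-token compute scales with k,
    not with the total number of experts.
    """
    # Router score per expert: dot product of x with that expert's gate vector.
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep the indices of the k highest-scoring experts.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize gate probabilities over the selected experts only.
    probs = softmax([scores[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # only the selected experts run
        out = [o + p * y_j for o, y_j in zip(out, y)]
    return out, top


random.seed(0)
dim, num_experts = 4, 8
gate = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
# Toy experts: each one scales its input by a different constant.
experts = [lambda v, s=s: [s * t for t in v] for s in range(1, num_experts + 1)]
y, chosen = moe_forward([0.5, -1.0, 2.0, 0.1], gate, experts, k=2)
print("experts used:", chosen, "of", num_experts)
```

Here only 2 of the 8 toy experts are evaluated for the input, which is the property that lets MoE models carry many parameters while paying for only a fraction of them per token.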

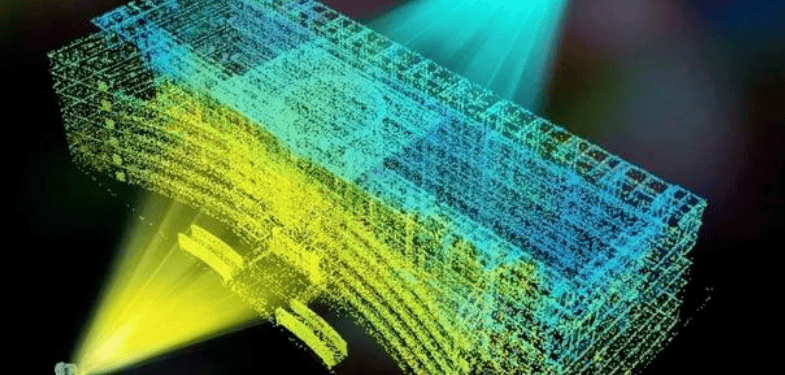

Scaling up AI inference runs into a latency problem, and this is where Groq, with its high-speed inference hardware, comes in. Pairing efficient model architectures with Groq's fast token generation can let systems reason more within the same time budget while cutting user wait times. GPUs have long served as general-purpose engines for AI workloads, but as models shift toward complex reasoning, the computational demands are changing. Training requires massive parallel processing, whereas inference generates tokens sequentially, each one depending on the last, so the speed of reading model weights from memory often sets the pace. Groq's LPU addresses this by keeping model weights in on-chip SRAM rather than external HBM, giving it the memory bandwidth needed for real-time inference.
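A common back-of-the-envelope model makes the bandwidth argument concrete: if generating each token requires reading every active weight once, single-stream decode speed is capped at roughly memory bandwidth divided by weight bytes. The sketch assumes that simplified model; the function name and the bandwidth figures are hypothetical round numbers, not vendor specifications.

```python
def max_decode_tokens_per_sec(
    active_params_billion: float, bytes_per_param: float, bandwidth_tb_per_s: float
) -> float:
    """Rough ceiling on single-stream decode speed for a memory-bound model.

    Assumes every generated token reads all active weights once from memory.
    Ignores KV-cache traffic, batching, compute limits, and interconnect overhead.
    """
    weight_bytes = active_params_billion * 1e9 * bytes_per_param
    return (bandwidth_tb_per_s * 1e12) / weight_bytes


# Hypothetical 70B-parameter dense model stored at 1 byte per weight (8-bit).
for label, bw in [("3 TB/s, HBM-class (hypothetical)", 3.0),
                  ("80 TB/s, SRAM-class (hypothetical)", 80.0)]:
    print(f"{label}: ~{max_decode_tokens_per_sec(70, 1, bw):.0f} tokens/s upper bound")
```

For an MoE model, `active_params_billion` would be only the parameters activated per token, which is why efficient architectures and fast memory compound rather than compete.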

Executives are focused on the latency of AI "thinking time." An AI agent, for instance, might need to generate a large number of internal tokens to verify a task; that could take 20 to 40 seconds on a standard GPU but under 2 seconds on Groq. If Nvidia were to integrate Groq's technology, it could strengthen its real-time inference capabilities while drawing on the software advantages of the CUDA ecosystem, offering one efficient platform for both training and running models. Combined with open-source models such as DeepSeek 4, this would give Nvidia an opportunity to expand its inference business and serve a growing customer base.
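Because reasoning tokens are produced one after another, thinking time is roughly the token count divided by the per-stream generation rate. The sketch below assumes that linear model; the token count and both rates are hypothetical values chosen only to show how a gap like 20 to 40 seconds versus under 2 seconds can arise.

```python
def thinking_time_s(reasoning_tokens: int, tokens_per_sec: float) -> float:
    """Seconds to emit reasoning tokens strictly one after another.

    Ignores prompt prefill, network latency, and tool calls.
    """
    return reasoning_tokens / tokens_per_sec


tokens = 6_000  # hypothetical internal verification tokens for one agent step
for label, rate in [("hypothetical GPU stream, 200 tok/s", 200),
                    ("hypothetical LPU stream, 4,000 tok/s", 4_000)]:
    print(f"{label}: {thinking_time_s(tokens, rate):.1f} s")
```

Since latency grows linearly with the number of reasoning tokens, a faster per-stream rate matters most for agents that think longest.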

AI development resembles a ladder of breakthroughs: GPUs solved raw computational speed, the Transformer architecture made large-scale training effective, and Groq's LPU accelerates the sequential token generation behind inference-time reasoning. Through this strategic positioning, Jensen Huang is propelling Nvidia toward the next generation of intelligent technology.