In high-end equipment manufacturing, innovation in robotics has long been a key driver of industry development. Conventional industrial robots and robots built for hazardous environments are easy to model and control, but their rigid structures make them hard to operate in narrow spaces and on uneven terrain. Soft biomimetic robots adapt well to their surroundings and can reach hard-to-access areas, but they typically depend on an array of onboard sensors and a custom spatial model built for each individual robot, which drives up cost and complexity.

A research team at MIT has now developed a deep-learning control system with modest resource requirements that could change how biomimetic robots are controlled. The findings were published in Nature.

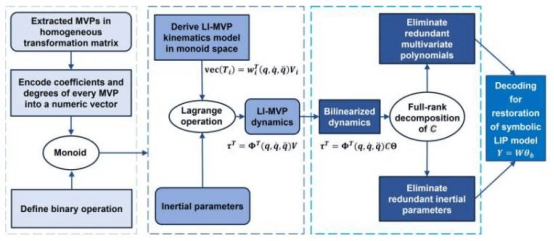

Instead of relying on conventional, model-heavy approaches, the team trained a deep neural network on two to three hours of multi-view video of various robots executing random commands. The trained network can then reconstruct a robot's shape and range of motion from a single image. Until now, machine-learning-based robot control required expert customization and expensive motion-capture systems, and the lack of a general-purpose control approach limited applications and made rapid prototyping far less practical. The new method frees robot hardware design from the need for hand-built models, a requirement that pushed designers toward precision manufacturing, expensive materials, extensive sensing, and conventional rigid components.
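The article does not describe how the trained network is turned into motor commands. As an illustrative assumption only, suppose the network can be queried for how each tracked 3D point on the robot would move per unit change in each command, i.e. a Jacobian matrix. A controller could then pick the command change that best moves the points toward their targets with a damped least-squares step. The sketch below is not the MIT team's code: `command_update`, `toy_control_loop`, the synthetic linear "robot", and the `damping` value are all hypothetical.

```python
import numpy as np


def command_update(jacobian, current_points, target_points, damping=1e-3):
    """Return a command change du such that jacobian @ du ≈ target - current.

    jacobian: (3*N, M) array (assumed model output); column j is the predicted
    displacement of N tracked 3D points per unit change of command j, with the
    (x, y, z) coordinates of each point flattened in order.
    Solves the damped least-squares system (J^T J + damping * I) du = J^T e.
    """
    error = (np.asarray(target_points) - np.asarray(current_points)).reshape(-1)
    J = np.asarray(jacobian)
    A = J.T @ J + damping * np.eye(J.shape[1])
    return np.linalg.solve(A, J.T @ error)


def toy_control_loop(steps=20, seed=0):
    """Drive a synthetic, perfectly linear 'robot' (hypothetical) to a goal pose."""
    rng = np.random.default_rng(seed)
    n_points, n_cmds = 5, 3
    J_true = rng.normal(size=(3 * n_points, n_cmds))
    rest = rng.normal(size=(n_points, 3))

    def observe(u):
        # Stand-in for estimating 3D point positions from a single camera image.
        return rest + (J_true @ u).reshape(n_points, 3)

    u_goal = np.array([0.5, -0.2, 0.1])
    target = observe(u_goal)
    u = np.zeros(n_cmds)
    for _ in range(steps):
        # Closed loop: observe, compute a correction, apply it.
        u = u + command_update(J_true, observe(u), target)
    return u, u_goal, np.abs(observe(u) - target).max()


u, u_goal, max_err = toy_control_loop()
print(u, u_goal, max_err)
```

In a real soft robot the Jacobian would change with the robot's configuration and would be re-estimated from each new image; it is held fixed here only because the toy robot is linear. The small `damping` term keeps the step well behaved when some commands barely move the tracked points.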

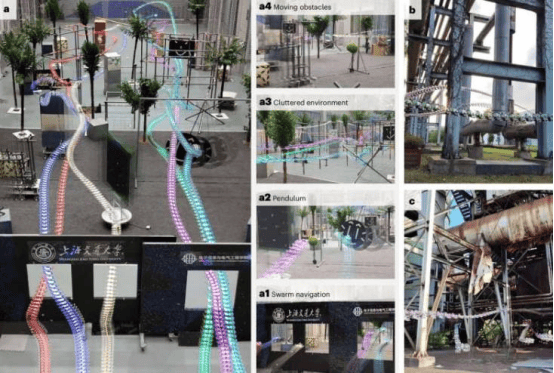

In testing, the single-camera machine-learning method performed well. It achieved precise control across a range of robot systems, including a 3D-printed pneumatic hand, a soft inflatable wrist joint, a 16-DOF Allegro hand, and a low-cost Poppy robotic arm. Joint-motion error stayed below 3 degrees and fingertip-positioning error below 4 mm (about 0.16 inches), and the system could compensate for changes in the robot's motion and in its surroundings.

MIT PhD student Sizhe Lester Li noted in an MIT feature article that the research marks a shift in robotics from programming robots to teaching them. Today, many robot tasks require extensive engineering and coding; in the future, tasks could simply be demonstrated to robots, which would then learn on their own how to achieve the goal.

The system does have limits. Because it currently relies on vision alone, it is not suited to dexterous tasks that require contact sensing and tactile manipulation, and its performance degrades when visual cues are insufficient. The researchers said that adding tactile and other sensors could let robots take on more complex tasks, and that the approach could extend automated control to a broader range of robots, including those with few or no embedded sensors. The work opens new paths for robotics in high-end equipment manufacturing and could help move the industry into a new stage of development.