Traditional robots, such as those used in industrial and hazardous environments, are easy to model and control but too rigid to operate in tight spaces or on uneven terrain. Soft, bio-inspired robots, by contrast, adapt better to their surroundings and can perform manipulation tasks in hard-to-reach places.

However, this flexibility typically requires a suite of onboard sensors and spatial models custom-designed for each individual robot.

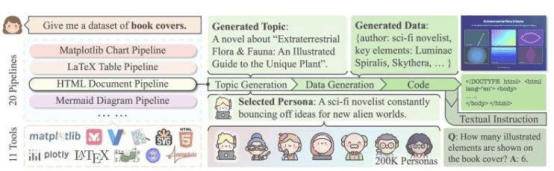

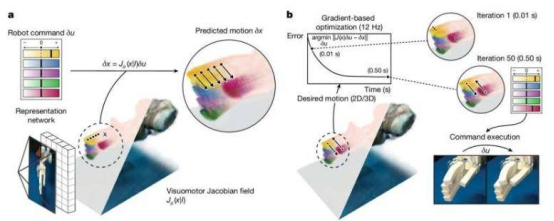

A research team at the Massachusetts Institute of Technology has taken a new, far less resource-intensive approach, developing a much simpler deep learning control system that can teach soft, biologically inspired robots to move and follow commands using just a single image.

Their findings were published in the journal Nature.

The researchers trained a deep neural network on two to three hours of multi-view video of various robots executing random commands. The trained network can then reconstruct a robot's shape and range of motion from just one image.
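The article does not describe the network's internals, but the loop it outlines can be illustrated in miniature: watch the robot respond to random commands, learn how commands move it, then invert what was learned to follow a requested motion. The toy sketch below swaps the deep network for a plain linear least-squares model of a simulated two-actuator robot. Everything in it (the `TRUE_A` response matrix, `observe_tip`, the noise level, the 200 trials) is a hypothetical stand-in chosen for illustration and is not the MIT team's method.

```python
import random

# Hypothetical "true" robot: tip displacement = TRUE_A @ command.
# The learner never reads this matrix directly; it only sees noisy observations.
TRUE_A = [[1.5, 0.3], [-0.4, 0.9]]


def transpose(m):
    return [list(row) for row in zip(*m)]


def mat_mul(x, y):
    # (n x m) @ (m x p) -> (n x p)
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def mat_inv_2x2(m):
    (a, b), (c, d) = m
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def observe_tip(command, rng, noise=0.01):
    """Simulated camera measurement of tip displacement (hypothetical stand-in)."""
    return [sum(TRUE_A[i][j] * command[j] for j in range(2)) + rng.gauss(0.0, noise)
            for i in range(2)]


def fit_linear_model(commands, displacements):
    """Least-squares estimate of A in y = A u, i.e. A = Y U^T (U U^T)^-1."""
    U = transpose(commands)        # 2 x N, one column per random trial
    Y = transpose(displacements)   # 2 x N
    Ut = commands                  # N x 2
    return mat_mul(mat_mul(Y, Ut), mat_inv_2x2(mat_mul(U, Ut)))


def command_for_target(model, target):
    """Invert the learned model to get the command expected to produce `target`."""
    inv = mat_inv_2x2(model)
    return [sum(inv[i][j] * target[j] for j in range(2)) for i in range(2)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Learning phase: 200 random actuator commands and the observed tip motion.
    commands = [[rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for _ in range(200)]
    displacements = [observe_tip(u, rng) for u in commands]
    model = fit_linear_model(commands, displacements)
    # Control phase: request a tip displacement and check what the true robot does.
    target = [0.5, -0.2]
    u = command_for_target(model, target)
    reached = [sum(TRUE_A[i][j] * u[j] for j in range(2)) for i in range(2)]
    print("requested:", target, "reached:", [round(v, 3) for v in reached])
```

A real soft robot responds nonlinearly and its state has to be inferred from camera images, which is why the researchers rely on a deep neural network rather than a single matrix; the linear toy only shows the learn-from-random-commands, then-invert pattern.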

Earlier machine learning control designs required expert customization and expensive motion capture systems. Without a general-purpose control system, their applications were limited and rapid prototyping was much less practical.

In the paper, the researchers note that their approach frees robot hardware design from its dependence on manual modeling, which in the past required precision manufacturing, expensive materials, extensive sensing capabilities, and reliance on traditional rigid components.

The new single-camera machine learning method achieved high-precision control in tests across various robotic systems, including a 3D-printed pneumatic hand, a soft inflatable wrist joint, the 16-DOF Allegro hand, and the low-cost Poppy robotic arm.

In these tests, joint motion errors stayed below 3 degrees and fingertip position errors below 4 millimeters (about 0.16 inches). The system was also able to compensate for changes in the robot's motion and in the surrounding environment.

MIT PhD student Sizhe Lester Li noted in an online feature article: "This research marks a shift in robotics from programming to teaching."

He added: "Today, many robotic tasks require extensive engineering and coding. In the future, we envision showing robots what to do and letting them learn autonomously how to achieve the goal."

Because the system relies solely on vision, it may not suit tasks that require contact sensing or tactile manipulation. Its performance could also degrade when visual cues are insufficient.

The researchers said that adding tactile and other sensors could allow robots to perform more complex tasks. They added that the approach could also extend automated control to a broader range of robots, including those with few or no embedded sensors.