A new study led by University of Michigan researchers, published in Energy Conversion and Management: X, exploits the inherent symmetry of nuclear microreactors to substantially reduce the training time of machine learning models that simulate power adjustments. The result brings automated control of these compact reactors one step closer.

Faster training lets researchers model reactors more quickly and moves the field toward real-time autonomous control, an essential capability for operating nuclear microreactors in remote terrestrial locations or on future space missions.

Nuclear microreactors are compact, easily transportable systems that can generate up to 20 MW of thermal energy, which is either used directly as heat or converted to electricity. They could power long-haul cargo ships that rarely refuel, provide stable carbon-free backup when solar or wind is unavailable, or supply energy in space, either for spacecraft propulsion or for onboard systems. Unlike large reactors, which require massive investment, microreactors are cost-effective, and partially automating their power output control would reduce operating costs further. In space, full autonomy is mandatory.

The researchers treated simulated load-following as the first step toward automation. Load-following, which means adjusting power output to match grid demand, is simpler to model than reactor startup, which involves rapid, hard-to-predict transients.

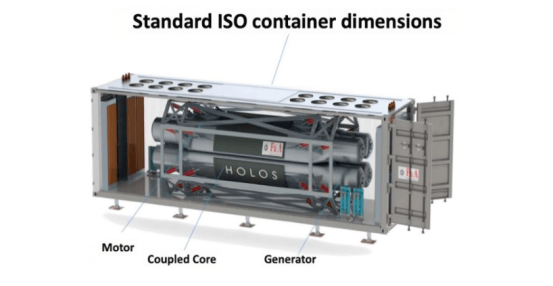

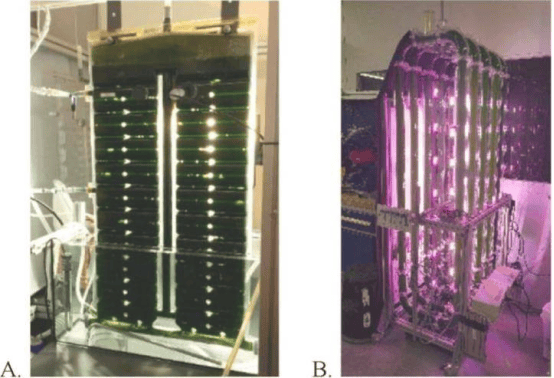

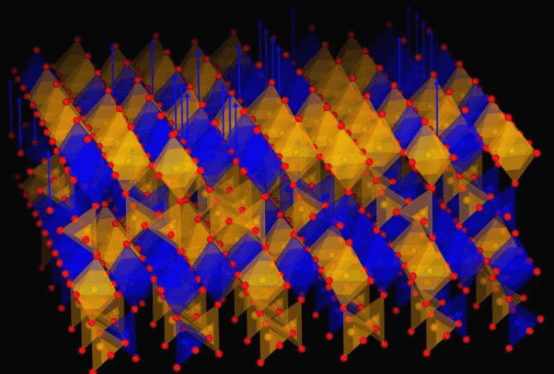

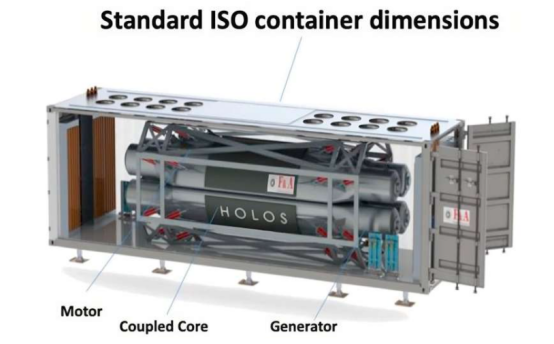

The study simulated the Holos-Quad microreactor design, which regulates power via the position of eight control drums surrounding the central core where neutrons split uranium atoms to produce energy. One side of each drum is lined with neutron-absorbing boron carbide. Rotating drums inward absorbs neutrons and reduces power; rotating outward retains more neutrons in the core and increases power.
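The drum mechanism can be pictured with a toy model. The sketch below is purely illustrative: the cosine shape, the angle convention, the `max_worth` parameter, and the function names are assumptions made for this example, not data or code from the study or the Holos-Quad design.

```python
import math

N_DRUMS = 8  # the design described above has eight control drums


def drum_reactivity(angle_deg, max_worth=1.0):
    """Toy reactivity contribution of one drum, in arbitrary units.

    Assumed convention (hypothetical):
      angle_deg = 0   -> absorber faces the core, strongest absorption
      angle_deg = 180 -> absorber faces away, no absorption
    The smooth cosine shape is an illustrative assumption.
    """
    facing = (1.0 + math.cos(math.radians(angle_deg))) / 2.0
    return -max_worth * facing


def total_reactivity(angles_deg, max_worth=1.0):
    """Sum the toy contributions of all drums."""
    return sum(drum_reactivity(a, max_worth) for a in angles_deg)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, total_reactivity([angle] * N_DRUMS))
```

In this toy picture, turning every drum from 0 to 180 degrees removes the absorber from the core's view, so the total moves from its most negative value toward zero and power would rise.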

Assistant Professor Majdi Radaideh (Nuclear Engineering and Radiological Sciences, University of Michigan), senior author of the study, explains that deep reinforcement learning builds a system dynamics model capable of real-time control, whereas traditional approaches like model predictive control often fail to achieve real-time performance due to repeated optimization.

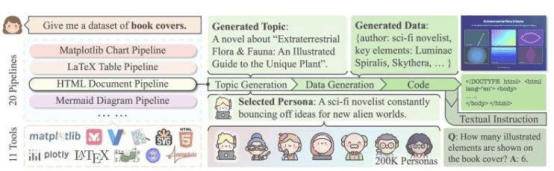

The team used reinforcement learning, in which an agent learns optimal actions through trial-and-error interaction with the environment, while simulating load-following via control drum rotation based on reactor feedback. Although powerful, deep reinforcement learning requires extensive training, increasing computational cost and time.

To address this, the researchers applied multi-agent reinforcement learning, an approach they describe as new in this context. Eight independent agents were trained to control individual drums while sharing full-core information, leveraging the microreactor's symmetry to accelerate learning.
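One way symmetry can help is sketched below, under stated assumptions. The article does not describe the implementation, so everything here is hypothetical: the idea that each agent sees the core readings rotated into its own drum's frame, the single shared linear policy, and all names (`local_view`, `shared_policy`, `act_all`).

```python
import math

N_DRUMS = 8


def local_view(core_obs, drum_index):
    """Rotate the per-drum readings so drum `drum_index` comes first.

    Every agent still sees the full core, just from its own position.
    """
    return core_obs[drum_index:] + core_obs[:drum_index]


def shared_policy(view, weights):
    """Hypothetical linear policy; the same weights serve every agent."""
    return math.tanh(sum(w * v for w, v in zip(weights, view)))


def act_all(core_obs, weights):
    """Return one drum command per agent, all from the shared policy."""
    return [shared_policy(local_view(core_obs, i), weights)
            for i in range(N_DRUMS)]


if __name__ == "__main__":
    obs = [0.1 * i for i in range(N_DRUMS)]
    weights = [0.5, -0.2, 0.1, 0.0, 0.0, 0.0, 0.1, -0.2]
    print(act_all(obs, weights))
```

In a scheme like this, each simulated time step yields eight experiences for one set of weights instead of one, which is one plausible reason a symmetric multi-agent setup could train faster than a single agent controlling all drums.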

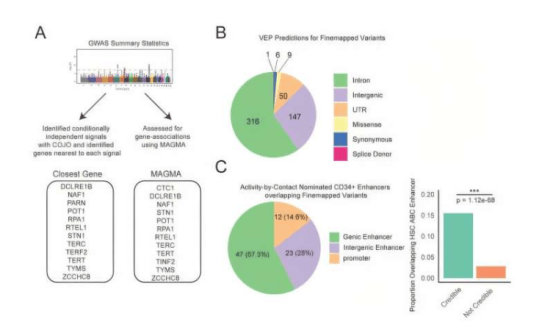

The multi-agent method was benchmarked against two alternatives: a single-agent approach (one agent observes the entire core and controls all eight drums) and industry-standard proportional-integral-derivative (PID) control using feedback loops.
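For readers unfamiliar with the PID baseline, here is a minimal textbook sketch of such a feedback loop. The gains, the time step, and the first-order "plant" standing in for the reactor are assumptions chosen for illustration. They are not the study's controller or reactor model.

```python
class PID:
    """Textbook proportional-integral-derivative controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, when there is no history.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(demand, steps=300, dt=0.1):
    """Track a constant demand with a toy plant: d(power)/dt = u - power.

    The plant is a hypothetical stand-in, not a reactor model.
    """
    controller = PID(kp=2.0, ki=1.0, kd=0.0)  # illustrative gains
    power = 0.0
    history = []
    for _ in range(steps):
        u = controller.update(demand, power, dt)
        power += dt * (u - power)  # explicit Euler step of the toy plant
        history.append(power)
    return history


if __name__ == "__main__":
    trace = simulate(demand=1.0)
    print(f"final power: {trace[-1]:.4f}")
```

The controller reacts only to the measured error at each step, which is why PID is cheap to run and serves as a natural baseline for learned controllers.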

Results showed that reinforcement learning achieved load-following performance comparable to or better than PID. Under imperfect conditions, such as noisy sensor readings or fluctuating reactor states, reinforcement learning maintained lower error rates than PID at a reported control cost up to 150% lower, meaning it reached its solutions with significantly less drum movement. Multi-agent training was at least twice as fast as the single-agent approach, with only marginally higher error.

Although extensive validation under more complex and realistic conditions is still needed before field deployment, the study opens a more efficient path toward reinforcement learning for autonomous nuclear microreactors. Radaideh concludes: "This work is a step toward future digital twins where reinforcement learning will drive system actions. Our next goal is to close the loop with inverse calibration and high-fidelity simulation to further improve control precision."